Applies to NetBackup versions 3.x, 4.x, 5.x, 6.x and 7.x

Any site using NetBackup should take the time to configure and adjust the BPTM buffers. It improves performance a LOT.

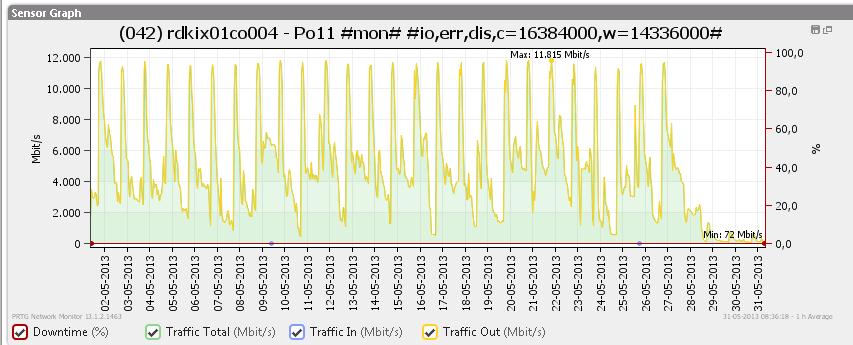

Just to show what a difference the buffer settings really make for an LTO3 drive:

Before tuning: 19MB/sec (default values)

Second tuning attempt: 49MB/sec (using 128K block size, 64 buffers)

Final result: 129MB/sec (using 128K block size and 256 buffers)

Since an LTO3 drive has a native transfer rate of 80MB/sec (anything above that comes from the drive's hardware compression), any further tuning attempts are meaningless.

Buffer tuning advice could not be found anywhere in the NetBackup manuals until NBU 5.x was released. It's now firmly documented in tech notes and in the “NetBackup Backup Planning and Performance Tuning Guide”.

All NetBackup installations have a directory in /usr/openv/netbackup called db. The db directory is home to various configuration files, and the files that control the memory buffer size and the number of buffers live here too (in db/config). For a full understanding of what the settings control, I need to get a little technical. Incoming backup data from the network is not written to tape immediately. It's buffered in memory first. If you have ever wondered why the NetBackup activity monitor shows files being written even though no tape is mounted, this is why.

Data is received by a bptm process and written into memory. The bptm process works on a block basis. Once a block is full, it's written to tape by another bptm process. Since the memory block size is equal to the SCSI block size used on tape, we need to take care! By using a bigger block size we can improve tape writes by reducing system overhead. Too small a block size causes extensive repositioning (shoe shining), which leads to:

- Slow backups, because the tape drive spends its time repositioning instead of writing data.

- Increased wear on media and tape drive, because of multiple tape passes over the R/W head.

- Increased build-up of magnetic material on the Read/Write head. Often a cleaning tape can't remove this dirt!

The block size and the number of buffers are controlled by two NetBackup config files, SIZE_DATA_BUFFERS and NUMBER_DATA_BUFFERS. SIZE_DATA_BUFFERS defines the size in bytes (see table below) and NUMBER_DATA_BUFFERS controls how many buffers are reserved for each stream. Memory used for buffers is allocated in shared memory. Shared memory cannot be paged or swapped.

| Block size | SIZE_DATA_BUFFERS value |
| --- | --- |
| 32K block size | 32768 |
| 64K block size | 65536 |
| 128K block size | 131072 |
| 256K block size (default size) | 262144 |

As far as I know you can’t set the value to anything higher than 256KB because it is the biggest supported SCSI block size. To ensure enough free memory, use this formula to calculate memory consumption:

number of tape drives * MPX * SIZE_DATA_BUFFERS * NUMBER_DATA_BUFFERS = TOTAL AMOUNT OF SHARED MEMORY

or a real world example

8 tape drives * 5 MPX streams * 128K block size * 128 buffers per stream = 655,360 KB = 640MB

If all drives and streams are in use, total memory usage is 640MB (shared memory). Just remember that this amount changes if any parameter is changed, e.g. number of drives, larger MPX setting etc. I think that a reasonable NUMBER_DATA_BUFFERS value would be 256. The value must be a power of 2.
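The formula above is easy to script so you can try different settings before touching a media server. This is only a sketch: the function names `shm_bytes` and `shm_mb` are made up for this example, and it assumes 1 MB = 1024 * 1024 bytes.

```shell
# shm_bytes DRIVES MPX SIZE_DATA_BUFFERS NUMBER_DATA_BUFFERS
# Prints the worst-case shared memory use in bytes
# (all drives and all MPX streams busy at the same time).
shm_bytes() {
    echo $(( $1 * $2 * $3 * $4 ))
}

# shm_mb: same arguments, result in MB (1 MB = 1024 * 1024 bytes).
shm_mb() {
    echo $(( $(shm_bytes "$@") / 1024 / 1024 ))
}

# The example from the text: 8 drives, MPX 5, 128K blocks, 128 buffers.
shm_mb 8 5 131072 128    # prints 640
```

Compare the result with the free memory on the media server before you raise any of the four parameters.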

Configuring SIZE_DATA_BUFFERS & NUMBER_DATA_BUFFERS:

The cookbook (the bullet proof version). We assume that the wanted configuration is a 256K block size and 256 memory buffers per stream:

touch /usr/openv/netbackup/db/config/SIZE_DATA_BUFFERS

touch /usr/openv/netbackup/db/config/NUMBER_DATA_BUFFERS

echo 262144 > /usr/openv/netbackup/db/config/SIZE_DATA_BUFFERS

echo 256 > /usr/openv/netbackup/db/config/NUMBER_DATA_BUFFERS
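If you have many media servers, the cookbook steps can be wrapped in a small function that refuses obviously wrong values. This is a sketch, not anything shipped with NetBackup: `is_power_of_two` and `write_buffer_config` are names invented here, and the list of accepted sizes is simply the four values from the table above.

```shell
# is_power_of_two N: succeeds if N is a positive power of two.
is_power_of_two() {
    [ "$1" -gt 0 ] 2>/dev/null && [ $(( $1 & ($1 - 1) )) -eq 0 ]
}

# write_buffer_config DIR SIZE COUNT
# Writes SIZE_DATA_BUFFERS and NUMBER_DATA_BUFFERS into DIR.
write_buffer_config() {
    # Assumption: only the four sizes from the table are accepted.
    case "$2" in
        32768|65536|131072|262144) ;;
        *) echo "unsupported SIZE_DATA_BUFFERS: $2" >&2; return 1 ;;
    esac
    if ! is_power_of_two "$3"; then
        echo "NUMBER_DATA_BUFFERS must be a power of 2: $3" >&2
        return 1
    fi
    # '>' replaces any old content, so each file holds exactly one value.
    echo "$2" > "$1/SIZE_DATA_BUFFERS" &&
    echo "$3" > "$1/NUMBER_DATA_BUFFERS"
}

# Usage on a media server (as root):
#   write_buffer_config /usr/openv/netbackup/db/config 262144 256
```

Both values are checked before anything is written, so a typo leaves the existing files untouched.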

The configuration files need to be created on all media servers. You don't need to bounce any daemons. The very next backup will use the new settings. But be careful to set the SIZE_DATA_BUFFERS value right. A misconfigured value will impact performance negatively. The last thing to do is to verify the settings. We can get the information from the bptm logs in /usr/openv/netbackup/logs/bptm. Look for the io_init messages in the bptm log.

00:03:25 [17555] <2> io_init: using 131072 data buffer size

00:03:25 [17555] <2> io_init: CINDEX 0, sched Kbytes for monitoring = 10000

00:03:25 [17555] <2> io_init: using 24 data buffers

The io_init messages show up for every new bptm process. The value in the brackets is the PID. If you need to see what happened, do a grep on the PID.
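To avoid reading the log by eye, the two io_init values can be pulled out with sed. A sketch only: the function names are invented for this example, and the patterns assume the log lines look exactly like the three sample lines above.

```shell
# logged_buffer_size LOGFILE
# Prints the data buffer size from the last matching io_init line.
logged_buffer_size() {
    sed -n 's/.*io_init: using \([0-9][0-9]*\) data buffer size.*/\1/p' "$1" | tail -n 1
}

# logged_buffer_count LOGFILE
# Prints the number of data buffers from the last matching io_init line.
logged_buffer_count() {
    sed -n 's/.*io_init: using \([0-9][0-9]*\) data buffers.*/\1/p' "$1" | tail -n 1
}

# Usage: pass one of the log files found in /usr/openv/netbackup/logs/bptm
#   logged_buffer_size  <bptm log file>
#   logged_buffer_count <bptm log file>
```

If the numbers printed don't match what you put in the config files, check that the files are in the right directory on that media server.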

Getting data back – NUMBER_DATA_BUFFERS_RESTORE

Storing data on tape is one thing, getting it back fast is more important. When restoring data the bptm process looks for a file called NUMBER_DATA_BUFFERS_RESTORE. The default value is 8 buffers, which in my opinion is WAY TOO LOW. Use a value of 256 or larger. To verify, grep for “mpx_setup_restore_shm”:

3:08:14.308 [3328] <2> mpx_setup_restore_shm: using 512 data buffers, buffer size is 262144
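The restore setting can be written and checked the same way as the backup settings. A sketch under assumptions: both function names are invented here, the file is assumed to live in the same db/config directory as the other buffer files, and the pattern assumes the log line has the same layout as the sample above.

```shell
# set_restore_buffers DIR COUNT
# Writes NUMBER_DATA_BUFFERS_RESTORE into DIR, replacing any old value.
set_restore_buffers() {
    echo "$2" > "$1/NUMBER_DATA_BUFFERS_RESTORE"
}

# restore_shm_values LOGFILE
# Prints "<buffers> <buffer size>" from the last mpx_setup_restore_shm line.
restore_shm_values() {
    sed -n 's/.*mpx_setup_restore_shm: using \([0-9][0-9]*\) data buffers, buffer size is \([0-9][0-9]*\).*/\1 \2/p' "$1" | tail -n 1
}

# Usage on a media server (as root):
#   set_restore_buffers /usr/openv/netbackup/db/config 256
#   restore_shm_values <bptm log file>    # after the next restore
```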

Disk Buffers NUMBER_DATA_BUFFERS_DISK.

Works the same way as NUMBER_DATA_BUFFERS. In NBU 5.1 the default buffer size was raised to 256KB (largest SCSI block size possible). You can however lower that value with SIZE_DATA_BUFFERS_DISK. If NUMBER_DATA_BUFFERS_DISK/SIZE_DATA_BUFFERS_DISK don't exist, the values from NUMBER_DATA_BUFFERS/SIZE_DATA_BUFFERS are used.
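The fallback rule just described can be written down as a small lookup, which is handy when you want to know which value a disk backup will actually pick up. This only mirrors the rule as stated in the text, it is not NetBackup's own code, and `effective_disk_setting` is a name invented for this example.

```shell
# effective_disk_setting DIR NAME
# NAME is NUMBER_DATA_BUFFERS or SIZE_DATA_BUFFERS.
# Prints the value from NAME_DISK if that file exists, otherwise the
# value from NAME. Prints nothing if neither file exists.
effective_disk_setting() {
    if [ -f "$1/${2}_DISK" ]; then
        cat "$1/${2}_DISK"
    elif [ -f "$1/$2" ]; then
        cat "$1/$2"
    fi
}

# Usage:
#   effective_disk_setting /usr/openv/netbackup/db/config NUMBER_DATA_BUFFERS
#   effective_disk_setting /usr/openv/netbackup/db/config SIZE_DATA_BUFFERS
```

An empty result means neither file exists, so the built-in default applies.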

Change the settings on all media servers

If you change SIZE_DATA_BUFFERS, please change the value on all media servers, otherwise you will run into issues during image replication when source and destination use different values.

Do a backup/restore test.

Since NetBackup does automatic block size determination, everything should work without any problem. However, please do a backup/restore test.
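One simple way to check this is to copy the SIZE_DATA_BUFFERS file from every media server to one place and compare the copies. A sketch only: `same_value` is a name invented here, and how you collect the copies (scp or anything else) is up to you.

```shell
# same_value FILE...
# Succeeds if all the given files contain the same value.
same_value() {
    distinct=$(cat "$@" | sort -u | wc -l | tr -d ' ')
    [ "$distinct" -eq 1 ]
}

# Usage, with one collected copy per media server (file names are examples):
#   same_value SIZE_DATA_BUFFERS.media1 SIZE_DATA_BUFFERS.media2 \
#       || echo "SIZE_DATA_BUFFERS differs between media servers"
```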